Imagine teaching a curious traveler to navigate a vast, unfamiliar city. There are no signboards, no prior maps, and no one to guide them. They do not start with knowledge of the best routes. Instead, they rely on trial, error, and memory. Every path they walk becomes a lesson. Every success becomes a reward. Every mistake becomes a quiet reminder for the next decision. This is the essence of Reinforcement Learning, where an agent interacts with an environment to learn how to make better choices over time. The city is the environment, and the traveler is the learner, growing wiser with every step.

Learning Through Interaction: The Foundation of Reinforcement Learning

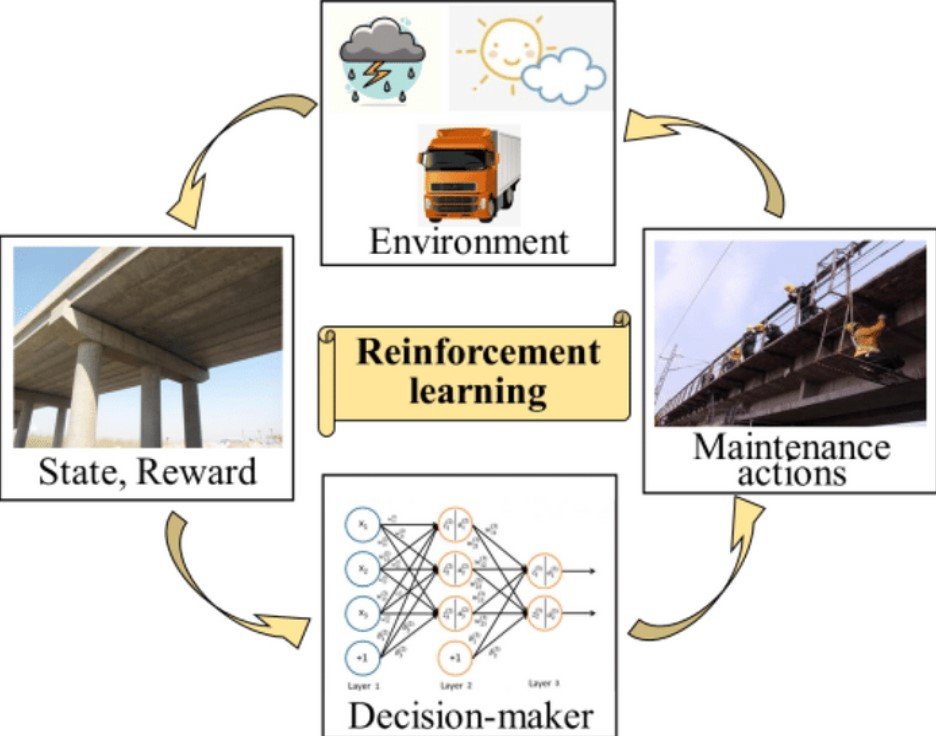

Reinforcement Learning (RL) is built around the idea of learning by doing. Unlike supervised learning, where a student has the answer key in hand, an RL agent has only a compass pointing toward reward. The agent performs actions, observes the outcomes, and receives a reward signal that tells it how good each choice was. Over time, it develops a strategy for choosing actions that maximizes the total reward it collects, not just the reward from the next step.
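To make the act, observe, and reward cycle concrete, here is a minimal sketch of one episode. The `Corridor` environment, its reward numbers, and the `always_right` policy are hypothetical and invented purely for illustration. They do not come from any particular library.

```python
class Corridor:
    """Hypothetical 1-D world: start at cell 0, the goal is the last cell."""

    def __init__(self, length=4):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action is +1 (move right) or -1 (move left); walls clamp the move.
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        # Assumed reward scheme: small cost per step, bonus on reaching the goal.
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=50):
    """Run one episode and return the total reward collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # feedback accumulates
        if done:
            break
    return total_reward


def always_right(state):
    """A fixed, non-learning policy used only to exercise the loop."""
    return 1


episode_return = run_episode(Corridor(), always_right)
```

A learning agent would replace `always_right` with a policy that changes in response to the rewards it sees. The loop itself stays the same.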

In many training programs, such as an AI course in Pune, learners explore RL as a framework that mirrors human learning itself. Rather than absorbing fixed instructions, both humans and RL agents refine their skills through feedback from the world around them.

Understanding Q-Learning: A Memory of Good Choices

Q-Learning is one of the cornerstone algorithms of Reinforcement Learning. In Q-Learning, the agent tries to estimate the Q-function, which assigns a Q-value to every pair of situation (state) and action. A Q-value represents how much total future reward the agent can expect if it takes that action in that state and then acts as well as it can afterward. Think of Q-values as the traveler’s notebook. Each street and turn is noted, along with whether it led to a lively market or a dead-end alley.

Q-Learning updates its knowledge by repeatedly comparing the predicted value of an action with a better-informed estimate: the reward that actually occurred, plus the discounted value of the best action available in the situation that follows. If the traveler finds a route that leads to a great outcome, that route receives a higher score in the notebook. If a path leads nowhere useful, its score is reduced. Each correction is only a partial step toward the new estimate, so over countless trials the notebook becomes more accurate, guiding the traveler toward better decisions.
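The notebook update can be sketched in a few lines. This is the standard tabular Q-learning rule, Q(s, a) ← Q(s, a) + α [r + γ max Q(s', a') − Q(s, a)]. The state names, action names, learning rate `alpha`, and discount `gamma` below are illustrative assumptions, not values taken from the article.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Value of the best action available in the situation that follows.
    best_next = max(q[(next_state, a)] for a in actions)
    # Better-informed estimate: observed reward plus discounted future value.
    td_target = reward + gamma * best_next
    # Move the old estimate part of the way toward the target.
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


ACTIONS = ["left", "right"]      # hypothetical action set
q = defaultdict(float)           # the "notebook": unseen entries start at 0.0
q[("market", "left")] = 1.0      # assume the market is already known to be good

# The traveler turns right in the alley, earns a reward of 1.0, and
# arrives at the market.
new_value = q_update(q, "alley", "right", reward=1.0,
                     next_state="market", actions=ACTIONS)
```

Here the target is 1.0 + 0.9 × 1.0 = 1.9, and with `alpha = 0.5` the entry for turning right in the alley moves halfway from 0.0 to 0.95.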

What is remarkable about Q-Learning is that it does not require knowledge of the environment’s rules. It is model-free: it learns from experience alone. But it also has limitations. Storing a separate Q-value for every combination of state and action becomes overwhelming in complex environments, and the method can struggle when decisions are influenced by many subtle variables.

Policy Gradients: Learning the Art of Strategy Directly

While Q-Learning focuses on assigning values to actions, Policy Gradient methods take a different approach. Instead of building a notebook of action values, these methods learn the policy directly. A policy is like a traveler’s instinct. It does not list all possible paths. Instead, it encodes patterns of behavior: “When I see a marketplace, I tend to turn left because that has worked well before.”

Policy Gradients use gradient-based optimization to improve performance. The agent samples actions, observes the rewards, and updates its internal strategy to increase the likelihood of repeating successful behaviors. This approach works especially well in environments where actions are continuous or where the number of possible moves is extremely large. It captures subtlety and nuance, even when the map is too complex to draw.
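As a rough illustration of the sample, observe, and update cycle, the sketch below applies a REINFORCE-style update to a softmax policy on a two-choice problem. The reward probabilities, learning rate, step count, and running-average baseline are assumptions chosen for the example. Real policy gradient systems typically use neural networks and much richer environments.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(prefs, action, advantage, lr):
    """Nudge preferences along advantage * grad log pi(action)."""
    probs = softmax(prefs)
    for a in range(len(prefs)):
        # For a softmax policy: d log pi(action) / d prefs[a]
        grad_log = (1.0 if a == action else 0.0) - probs[a]
        prefs[a] += lr * advantage * grad_log
    return prefs


def train(reward_probs, steps=2000, lr=0.1, seed=0):
    """Hypothetical setup: each action pays 1.0 with its own probability."""
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_probs)
    baseline = 0.0  # running average reward, used to reduce noise
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        policy_gradient_step(prefs, action, reward - baseline, lr)
        baseline += (reward - baseline) / t
    return softmax(prefs)


final_probs = train([0.2, 0.8])
```

Notice that nothing here stores a value per action. The preferences only shape how likely each action is, and actions followed by better-than-average rewards become more likely.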

However, Policy Gradients can be noisy and unstable, because each update is estimated from a limited number of sampled outcomes. Sometimes the learner becomes too confident in a suboptimal strategy. Balancing exploration and refinement is essential to prevent the agent from locking into poor habits.

Exploration vs. Exploitation: The Central Dilemma

At the heart of Reinforcement Learning lies the most human dilemma of all: Should we stick to what we know, or should we try something new?

Exploration is curiosity. It is the traveler who wanders down streets they have never seen before. It may lead to unexpected treasures or wasted time.

Exploitation is experience. It is the traveler who goes straight to the trusted café, knowing it serves good meals.

If an agent explores too much, it never settles on what it has learned and wastes effort on choices it already knows are poor. If it exploits too early, it may settle for a route that is only average. RL systems must keep balancing discovery against habit throughout learning.
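One common and simple way to strike this balance is the epsilon-greedy rule: with a small probability `epsilon` the agent picks a random action, and otherwise it picks the action it currently rates highest. The sketch below assumes the agent's estimates are held in a plain list. The numbers are made up for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        # Exploration: wander down a randomly chosen street.
        return rng.randrange(len(q_values))
    # Exploitation: head for the best-rated option so far.
    return max(range(len(q_values)), key=lambda a: q_values[a])


estimates = [0.1, 0.9, 0.3]  # hypothetical value estimates for three actions

greedy_choice = epsilon_greedy(estimates, epsilon=0.0)   # never explores
curious_choice = epsilon_greedy(estimates, epsilon=1.0)  # always explores
```

In practice `epsilon` is often started high and lowered gradually, so the agent explores widely at first and leans on experience later.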

This trade-off is not only theoretical. It appears in business decisions, scientific research, personal learning paths, and modern industrial automation. In many advanced training programs, including an AI course in Pune, this balance is studied carefully to optimize intelligent decision-making in dynamic environments.

Where These Paradigms Come to Life

Reinforcement Learning is not just a laboratory curiosity. It powers high-level robotics, autonomous vehicles, adaptive recommendation engines, intelligent trading algorithms, and game-playing systems that surpass human performance. Each of these real-world applications depends on the ability to refine decisions based on consequences.

The traveler metaphor plays out every day in software systems that learn the best possible strategies for achieving outcomes in shifting, uncertain environments.

Conclusion

Reinforcement Learning is a story about growing wiser through experience. Q-Learning offers memory and structured evaluation. Policy Gradients provide instinctive adaptation. The balance between exploration and exploitation mirrors our own lifelong learning journeys. Whether building autonomous machinery, optimizing decision systems, or modeling intelligent behavior, RL remains a profound field that teaches not just machines, but ourselves, how to learn from the world one step at a time.